|

| Someday You'll Find It ... The Jumplist Connection! |

So all this talk of Windows 7 Jumplists (eg here and here) got me thinking - What if you needed to look up an AppID and didn't have access to the Internet? Also, Is there any way we can import a list of AppIDs so we can then access them from some future scripts (eg for processing Jumplists)?

I then had my "Aha!" moment (no, nothing to do with that 80s band), and thought "SQLite!"

SQLite also has the benefit of being cross-platform - so an AppID List generated on SIFT should work on a PC etc. By using a standard database, we can also ensure that our fearless forensicator lab uses a common set of (unchanging) source data.

So how exactly can we do this (using the SIFT VM)?

1. We create an empty SQLite Database.

2. We parse the ForensicsWiki AppID HTML page for data.

3. We populate the Database

Creating The Empty Database

Creating an (empty) SQLite AppID Database is pretty straight forward. I opted to do this part manually using SIFT's "sqlite3" command line tool.

1. At a terminal command line, I typed:

"sqlite3 WinAppIDs.sqlite"

2. At the resultant "sqlite3" prompt, I typed (without the quotes):

"CREATE TABLE IF NOT EXISTS WinAppIDs (AppID TEXT, AppDescrip TEXT, DateAdded NUMERIC, Source TEXT);"

3. At the next "sqlite3" prompt, I typed (without the quotes):

"CREATE UNIQUE INDEX IF NOT EXISTS AppIdx ON WinAppIDs(AppID, AppDescrip);"

4. Finally, at the "sqlite3" prompt, I typed (without the quotes):

".quit"

There should now be a "WinAppIDs.sqlite" database file in the current directory (eg "/home/sansforensics"). This file contains a "WinAppIDs" table which has AppId, AppDescription, DateAdded and Source fields (just like the ForensicsWiki page tables).

Additionally, I have hacked/cheated a bit and created a Unique Index called "AppIdx". The purpose of the index is to ensure that we cannot insert duplicate entries. That is, we can't insert an entry with the same AppID AND the same AppDescription as an existing one. You wouldn't think this would be a problem with the AppId tables right? Wrong! In the tables there are entries such as:

Different AppIds, Same Descriptions

b8c29862d9f95832 Microsoft Office InfoPath 2010 x86

d64d36b238c843a3 Microsoft Office InfoPath 2010 x86

Same AppIDs, Different Descriptions

23646679aaccfae0 Adobe Reader 9.

23646679aaccfae0 Adobe Reader 9 x64

So rather than calling a SELECT query to check before every INSERT (there's 400+ AppIDs to insert), we'll just make the INSERT fail (via "AppIdx") when there's already an existing entry with the same AppID and AppDescription. For more on SQLite indexing read here. Specifically it says, "If the UNIQUE keyword appears between CREATE and INDEX then duplicate index entries are not allowed. Any attempt to insert a duplicate entry will result in an error."

Parsing the ForensicsWiki AppID HTML page for data and Populating the Database

Parsing/extracting the AppIDs from HTML is easy once you find out somebody's already written a CPAN package for that exact purpose! Its called "HTML::TableExtract" and you can read all about it here.

Additionally, the folks at ForensicsWiki have also included a "Source" link for each AppID. To extract the link we can use "HTML::LinkExtor". You can read about it here.

As we have seen in previous posts, we can use the Perl "DBI" package to interface with an SQLite Database. So knowing all this, we should now be able to insert our newly extracted data.

To simplify this exercise, we will extract the data and then enter it into the database from one script ("4n6wiki-parser.pl").

Incidentally, the StackOverflow website is a great searchable resource for Perl questions - this was where I not only heard about the previously mentioned packages but also saw example code of their use. Awesome!

Finally(!), we should write a short query script ("4n6wiki-query.pl") so that we can search our SQLite database by either AppID or AppDescrip.

Now let's take a look at what this monkey passes off for code ...

"4n6wiki-parser.pl" Code

#CODE BEGINS ON NEXT LINE

#!/usr/bin/perl -w

# Perl script to parse http://www.forensicswiki.org/wiki/List_of_Jump_List_IDs and import it into an existing SQLite Database

use strict;

use Getopt::Long;

use HTML::TableExtract;

use HTML::LinkExtor;

use DBI;

my $version = "4n6wiki-parser.pl v2012-03-27";

my $help = 0;

my $filename = "";

my $database = "";

GetOptions('help|h' => \$help,

'file=s' => \$filename,

'db=s' => \$database);

if ($help || $filename eq "" || $database eq "")

{

print("\nHelp for $version\n\n");

print("Perl script to parse http://www.forensicswiki.org/wiki/List_of_Jump_List_IDs and import it into an existing SQLite Database\n");

print("\nUsage: 4n6wiki-parser.pl [-h|help] [-file filename] [-db database]\n");

print("-h|help .......... Help (print this information). Does not run anything else.\n");

print("-file filename ... Copy of HTML file to be parsed.\n");

print("-db database ..... Target SQLite Database.\n");

print("\nExample: 4n6wiki-parser.pl -file List_of_Jump_List_IDs.html -db WinAppIDs.sqlite\n");

exit;

}

print "\nRunning $version\n\n";

open(my $htmlfile, "<".$filename) || die("Unable to open $filename for parsing\n");

my @lines = <$htmlfile>; # extract each line to a list element

my $html_string = join(' ', @lines); # join list elements into one big happy scalar string

close($htmlfile);

my $tblextract = HTML::TableExtract->new(keep_html => 1, headers => [qw(AppID Application Date Source)] );

$tblextract->parse($html_string);

my $lnkextr = HTML::LinkExtor->new();

my @bigarray; # will be a all table cells merged into one [row][cell] type array

my $rowcount = 0; # running count of rows in all tables

foreach my $tbl ($tblextract->tables)

{

my @rows = $tbl->rows;

my @cols = $tbl->columns;

print "Extracted Table Size = ".scalar(@rows)." x ".scalar(@cols)."\n";

for my $rownum (0 .. (@rows - 1))

{

# Ass-ume always 4 columns, last one (3) contains link info, col 2 contains date eg 8/22/2011

for my $colnum (0 .. (@cols - 1))

{

if ($colnum == (@cols - 2) )

{

#reformat date into form yyyy-mm-dd

my $date = $tbl->cell($rownum, $colnum);

$date =~ /(\d+)\/(\d+)\/(\d+)/;

my $year = $3;

my $month = $1;

my $day = $2;

if ($day < 10)

{

$day = "0".$day;

}

if ($month < 10)

{

$month = "0".$month;

}

my $newdate = $year."-".$month."-".$day;

$bigarray[$rowcount][$colnum] = $newdate;

}

elsif ($colnum == (@cols - 1))

{

# Extract a link entry eg "http://social.technet.microsoft.com/Forums/" for col 3

$lnkextr->parse($tbl->cell($rownum, $colnum));

for my $link_tag ( $lnkextr->links )

{

my ($tag, %links) = @$link_tag;

foreach my $key (keys %links)

{

$bigarray[$rowcount][$colnum] = $links{$key};

last; # only do it for one link then bail out

}

}

}

else

{

#Record each of the other column fields for this row ie col 0, 1, 2

$bigarray[$rowcount][$colnum] = $tbl->cell($rownum, $colnum);

}

}

$rowcount = $rowcount + 1;

}

}

print "Number of Rows Extracted from HTML = $rowcount\n";

my $db = DBI->connect("dbi:SQLite:dbname=$database","","") || die( "Unable to connect to database\n" );

my $sth;

for my $currow (0 .. ($rowcount-1))

{

my $id = $bigarray[$currow][0];

my $descrip = $bigarray[$currow][1];

my $date = $bigarray[$currow][2];

my $source = $bigarray[$currow][3];

$sth = $db->prepare_cached("INSERT INTO WinAppIDs (AppID, AppDescrip, DateAdded, Source) VALUES (?, ?, ?, ?)"); #or die "Couldn't prepare statement: ".$db->errstr;

$sth->execute($id, $descrip, $date, $source); #or die "Couldn't execute statement: ".$sth->errstr;

}

$sth->finish;

$db->disconnect;

print "$database Update complete ...\n"

# END CODE

"4n6wiki-parser.pl" Code Summary

Before you use this script, ensure that you have installed the following Perl packages: HTML::TableExtract, HTML::LinkExtor, DBI (eg type "sudo cpan HTML::TableExtract" etc.).

The first section calls "GetOptions" to parse the user's input arguments and then the following section is the Help printout.

We then read in the user specified HTML file into one long scalar variable ("$html_string").

Next we create a new HTML::TableExtract object to parse the table data. We use the argument "keep_html => 1" so we can later parse any web links in the table (using HTML::LinkExtor). The other argument, "headers => [qw(AppID Application Date Source)]" tells HTML::TableExtract the column headings so it can figure out how to extract data.

Note: I just used the first word of each column heading and it seemed to work OK. I don't think you can specify something like "Application Description" for a heading because the whitespace will confuse HTML::TableExtract. If they ever change the column headings to use the same first word (eg "AppID" and "AppID Description"), we're in trouble.

Anyhow, next we call the "parse" function of HTML::TableExtract to do all the heavy lifting and extract the data.

Now we create a new HTML::LinkExtor object to extract any HTML links in the table. We then proceed to loop through each row/column of each table that HTML::TableExtract has found (currently, there are 10 tables). We store the result in a huge array of arrays ("bigarray[rownum][colnum]").

For half of the columns (ie "AppId" and "Application Description"), we will just copy the data straight into "bigarray". The other 2 columns ("Date Added" and "Source") require a bit of massaging before entry (heheh).

ForensicsWiki lists the dates in the U.S. format of month/day/year (I DARE GU to say it's a better format! LOL) with no preceding zeros for single digits. SQLite uses the yyyy-mm-dd format in it's time calculations. So, just in case we want to sort later by date, our script has to re-arrange the date before storing it in "bigarray". We use a regular expression to extract the various parts of the date and then zero pad them if required before re-assembling them into the SQLite format. The regular expression code is:

"$date =~ /(\d+)\/(\d+)\/(\d+)/;"

In the line before this, "$date" was assigned the value from the date cell (eg "8/22/2011"). The "\d+"s indicate a field of multiple digits. The surrounding "( )"s indicate we are extracting the various values (in order) to $1, $2, $3. We also need to "\" the date separators "/" so they don't confuse the regular expression delimiters. More information on Perl Regular expressions is available here.

I found the basic code for the Link Extraction here. However, we call the HTML::LinkExtor's "parse" function with the table cell content (not the whole row). I found it quite difficult to initially understand this snippet, so I will try to explain using our actual code below.

$lnkextr->parse($tbl->cell($rownum, $colnum));

for my $link_tag ( $lnkextr->links )

{

my ($tag, %links) = @$link_tag;

foreach my $key (keys %links)

{

$bigarray[$rowcount][$colnum] = $links{$key};

last; # only do it for one link then bail out

}

}

To get the results of the HTML::LinkExtor's "parse" call, we have to call the "$lnkextr->links" method. According to the CPAN documentation, this returns data in the form:

"[$tag, $attr => $url1, $attr2 => $url2,...]".

The enclosing "[ ]"s denote an array containing the "$tag" scalar variable and what looks like a hash list ("$attr => $url1, $attr =>$url2").

Theoretically, there should only be one "$link_tag" result for our case and therefore, only one for ("my $link_tag") loop iteration.

In the loop, we now see: "my ($tag, %links) = @$link_tag;"

The right hand hand side, "@$link_tag" is saying treat this "$link_tag" scalar as a reference to an array (hence the "@"). This array will be the one containing the "$tag" and the hash list mentioned previously.

The left hand side assigns values for the various parts of the array. So we end up with "$tag" and a hash list array (now called "%links").

The next step is to iterate through "%links" and for the first key/URL it finds, store that link in "bigarrray".

For more information on Perl References see here.

OK, now we should have all the data we need in "bigarray" and we also have an SQLite database file ("WinAppIDs.sqlite") containing an empty table ("WinAppIDs"). Time to feed the machine!

As we've seen in previous posts, we first call DBI's "connect" to interface with the Database. If it doesn't work we quit and nothing gets inserted.

Next we loop through each "row" of "bigarray" and store the column values in temporary scalars ($id, $descrip, $date, $source). We then prepare our SQLite INSERT statement:

$sth = $db->prepare_cached("INSERT INTO WinAppIDs (AppID, AppDescrip, DateAdded, Source) VALUES (?, ?, ?, ?)"); #or die "Couldn't prepare statement: ".$db->errstr;

We're using "prepare_cached" because it apparently minimises resources for our repeated INSERTs (seriously?!) compared to a normal "prepare".

The "?" arguments are placeholders for our temporary values which get assigned in the next line.

$sth->execute($id, $descrip, $date, $source); #or die "Couldn't execute statement: ".$sth->errstr;

Note: I've commented out the ("die") error handling parts for "prepare_cached" and "execute" because if we fail to INSERT, it could be due to a duplicate. We should keep looping though "bigarray" because there might be new non-duplicate information later in "bigarray" (eg we might be updating an existing database).

After we've finished looping through "bigarray", we call "finish" and "close" and print out an "Update Complete" message.

"4n6wiki-query.pl" Code

# CODE BEGINS ON NEXT LINE

#!/usr/bin/perl -w

# Perl script to query an existing SQLite Database for Windows AppID info

# Intended to be used in conjunction with the 4n6wiki-parser.pl script.

use strict;

use Getopt::Long;

use DBI;

my $version = "4n6wiki-query.pl v2012-03-27";

my $help = 0;

my $appid = "";

my $descrip = "";

my $database = "";

GetOptions('help|h' => \$help,

'appid=s' => \$appid,

'descrip=s' => \$descrip,

'db=s' => \$database);

if ($help || $database eq "" || ($descrip eq "" && $appid eq "") || ($descrip ne "" && $appid ne "") )

{

print("\nHelp for $version\n\n");

print("Perl script to query an existing SQLite Database for Windows AppID info\n");

print("Intended to be used in conjunction with the 4n6wiki-parser.pl script.\n");

print("\nUsage: 4n6wiki-query.pl [-h|help] [-appid APPID] [-descrip Description] [-db database]\n");

print("-h|help .......... Help (print this information). Does not run anything else.\n");

print("-appid APPID ..... Search Database for match(es) for this APPID.\n");

print("-descrip Description ... Search Database for match(es) \"like\" this Description.\n");

print("-db database ..... Target SQLite Database.\n");

print("\nExamples: \n");

print("4n6wiki-parser.pl -appid 3dc02b55e44d6697 -db WinAppIDs.sqlite\n");

print("4n6wiki-parser.pl -descrip \"Adobe Flash\" -db WinAppIDs.sqlite\n");

print("Note: Listing BOTH -appid and -descrip will not work / prints this message\n\n");

exit;

}

print "\nRunning $version\n\n";

my $db = DBI->connect("dbi:SQLite:dbname=$database","","") || die( "Unable to connect to database\n" );

my $sth;

if ($appid ne "")

{

$sth = $db->prepare("SELECT AppID, AppDescrip FROM WinAppIDs WHERE AppID=?") or die "Couldn't prepare statement: ".$db->errstr;

$sth->execute($appid) or die "Couldn't execute statement: ".$sth->errstr;

PrintHeadings($sth);

PrintResults($sth);

if ($sth->rows == 0)

{

print "No Matching AppIDs found!\n";

}

else

{

print $sth->rows." Matches returned\n";

}

}

elsif ($descrip ne "")

{

my $likestr = "%".$descrip."%";

$sth = $db->prepare("SELECT AppID, AppDescrip FROM WinAppIDs WHERE AppDescrip LIKE ?") or die "Couldn't prepare statement: ".$db->errstr;

$sth->execute($likestr) or die "Couldn't execute statement: ".$sth->errstr;

PrintHeadings($sth);

PrintResults($sth);

if ($sth->rows == 0)

{

print "No Matching Descriptions found!\n";

}

else

{

print $sth->rows." Matches returned\n";

}

}

$sth->finish;

$db->disconnect;

# End Main

sub PrintHeadings

{

my $sth = shift;

# Print field headings

for (my $i = 0; $i <= $sth->{NUM_OF_FIELDS}-1; $i++)

{

if ($i == $sth->{NUM_OF_FIELDS} - 1)

{

print $sth->{NAME}->[$i]."\n"; #last item adds a newline char

}

else

{

print $sth->{NAME}->[$i]." | ";

}

}

}

sub PrintResults

{

my $sth = shift;

my @rowarray;

# Prints row by row / field by field

while (@rowarray = $sth->fetchrow_array() )

{

for (my $i = 0; $i <= $sth->{NUM_OF_FIELDS}-1; $i++)

{

if ($i == $sth->{NUM_OF_FIELDS} - 1 )

{

print $rowarray[$i]."\n"; #last field in row adds newline

}

else

{

if ($rowarray[$i])

{

print $rowarray[$i]." | ";

}

else

{

print " | "; # field returned could be UNDEFINED, just print separator

}

}

}

}

}

# CODE ENDS

"4n6wiki-query.pl" Code Summary

This script is very similar to our previous "ffparser.pl" script. It will use DBI to run SELECT queries against a user nominated SQLite Database. There will be 2 types of queries - a search by AppID and a search for LIKE terms in AppDescrip. We won't use an exact match for AppDescrip because the analyst might not know the exact description (eg they just know it's for CCleaner).

As usual, it starts off with "GetOptions" and the Help printout sections.

We then call DBI's "connect" and one of the versions of "prepare" (depending on the user's input arguments).

Next we call "PrintHeadings" and "PrintResults" (we cut and pasted these functions from "ffparser.pl").

Finally, we call "finish" and "disconnect".

Testing

To test our scripts we will save a copy of the current ForensicsWiki AppID page as "List_of_Jump_List_IDs.html". We will then edit/remove the entry for the "Win32 cmd.exe" in the last row of the last table and re-save it as "List_of_Jump_List_IDs-withoutCMD.html".

Now we can simulate an update by first running "4n6wiki-parser.pl" with "List_of_Jump_List_IDs-withoutCMD.html" and then again with "List_of_Jump_List_IDs.html". After our first run, our SQLite Database (created previously) should not contain our "Win32 cmd.exe" entry and then after the second run, it should contain our "Win32 cmd.exe" entry.

OK, here we run "4n6wiki-parser.pl" with "List_of_Jump_List_IDs-withoutCMD.html". (ie no "Win32 cmd.exe" entry)

sansforensics@SIFT-Workstation:~$ ./4n6wiki-parser.pl -file List_of_Jump_List_IDs-withoutCMD.html -db WinAppIDs.sqlite

Running 4n6wiki-parser.pl v2012-03-27

Extracted Table Size = 53 x 4

Extracted Table Size = 31 x 4

Extracted Table Size = 10 x 4

Extracted Table Size = 87 x 4

Extracted Table Size = 50 x 4

Extracted Table Size = 16 x 4

Extracted Table Size = 66 x 4

Extracted Table Size = 30 x 4

Extracted Table Size = 2 x 4

Extracted Table Size = 55 x 4

Extracted Table Size = 7 x 4

Number of Rows Extracted from HTML = 407

WinAppIDs.sqlite Update complete ...

sansforensics@SIFT-Workstation:~$

OK now we run a search for the "cmd" term in our "WinAppIDs.sqlite" database.

sansforensics@SIFT-Workstation:~$ ./4n6wiki-query.pl -descrip "cmd" -db WinAppIDs.sqlite

Running 4n6wiki-query.pl v2012-03-27

AppID | AppDescrip

6728dd69a3088f97 | Windows Command Processor - cmd.exe (64-bit)

1 Matches returned

sansforensics@SIFT-Workstation:~$

As expected, it only returns the value for the 64bit cmd.exe.

Now let's run "4n6wiki-parser.pl" with "List_of_Jump_List_IDs.html" (ie includes "Win32 cmd.exe" entry).

sansforensics@SIFT-Workstation:~$ ./4n6wiki-parser.pl -file List_of_Jump_List_IDs.html -db WinAppIDs.sqlite

Running 4n6wiki-parser.pl v2012-03-27

Extracted Table Size = 53 x 4

Extracted Table Size = 31 x 4

Extracted Table Size = 10 x 4

Extracted Table Size = 87 x 4

Extracted Table Size = 50 x 4

Extracted Table Size = 16 x 4

Extracted Table Size = 66 x 4

Extracted Table Size = 30 x 4

Extracted Table Size = 2 x 4

Extracted Table Size = 55 x 4

Extracted Table Size = 8 x 4

Number of Rows Extracted from HTML = 408

DBD::SQLite::st execute failed: columns AppID, AppDescrip are not unique at ./4n6wiki-parser.pl line 114.

DBD::SQLite::st execute failed: columns AppID, AppDescrip are not unique at ./4n6wiki-parser.pl line 114.

DBD::SQLite::st execute failed: columns AppID, AppDescrip are not unique at ./4n6wiki-parser.pl line 114.

[... Lines Edited Out]

DBD::SQLite::st execute failed: columns AppID, AppDescrip are not unique at ./4n6wiki-parser.pl line 114.

WinAppIDs.sqlite Update complete ...

sansforensics@SIFT-Workstation:~$

OK so we are getting some errors about duplicate entries but the last table seems to have an extra entry 8 x 4 (not 7 x 4). This looks promising!

We'll run a query and check ...

sansforensics@SIFT-Workstation:~$ ./4n6wiki-query.pl -descrip "cmd" -db WinAppIDs.sqlite

Running 4n6wiki-query.pl v2012-03-27

AppID | AppDescrip

6728dd69a3088f97 | Windows Command Processor - cmd.exe (64-bit)

bc0c37e84e063727 | Windows Command Processor - cmd.exe (32-bit)

2 Matches returned

sansforensics@SIFT-Workstation:~$

YAY! The update worked!

Let's run a quick query using the bc0c37e84e063727 AppId (for "Win32 cmd.exe") and see what results we get:

sansforensics@SIFT-Workstation:~$ ./4n6wiki-query.pl -appid bc0c37e84e063727 -db WinAppIDs.sqlite

Running 4n6wiki-query.pl v2012-03-27

AppID | AppDescrip

bc0c37e84e063727 | Windows Command Processor - cmd.exe (32-bit)

1 Matches returned

sansforensics@SIFT-Workstation:~$

OK we found our latest entry.

Just for fun, let's check how many entries we have via "sqlite3".

sansforensics@SIFT-Workstation:~$ sqlite3 WinAppIDs.sqlite

SQLite version 3.6.16

Enter ".help" for instructions

Enter SQL statements terminated with a ";"

sqlite>

sqlite> select count (AppId) from WinAppIDs;

408

sqlite>

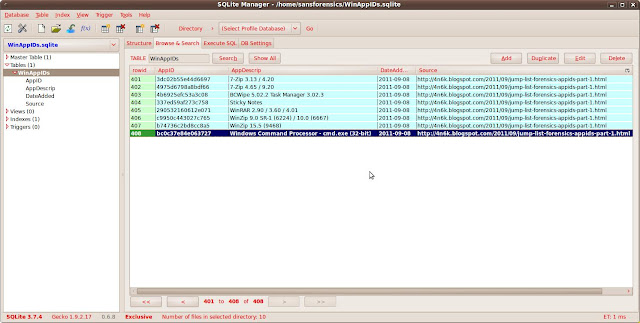

Now let's see if that corresponds with the SQLite Manager Firefox plugin.

|

| Opening our Database with the "SQLite Manager" Firefox Plugin |

Note(1): The HTML links have been extracted into the table Source column.

Note(2): There are 408 records reported ie the same as what our previous "sqlite3" query reported. The latest record is our "Win32 cmd.exe" record.

Our last check (with "sqlite3") is to see if our database's DateAdded field is usable by SQLite (we'll only grab the first 3 records). So here's those records with our inputted date format:

sqlite> select AppId, AppDescrip, DateAdded from WinAppIDs limit 3;

65009083bfa6a094|(app launched via XPMode)|2011-08-22

469e4a7982cea4d4|? (.job)|2011-08-22

b0459de4674aab56|(.vmcx)|2011-08-22

sqlite>

And here's the same results with the date field transformed into "number of days since now":

sqlite> select AppId, AppDescrip, julianday('now') - julianday(DateAdded) from WinAppIDs limit 3;

65009083bfa6a094|(app launched via XPMode)|220.419525995385

469e4a7982cea4d4|? (.job)|220.419525995385

b0459de4674aab56|(.vmcx)|220.419525995385

sqlite>

So we can see from 22 August 2011 until (now) 29 March 2012 has been calculated as ~220 days.

A manual check: 9 remaining days of August + 30 Sept days + 31 Oct days + 30 Nov days + 31 Dec days + 31 Jan days + 29 Feb days + 29 Mar days = 220

Coolio! Our date field is usable by SQLite.

Summary

OK so everything seems to work. We have extracted various fields from the ForensicsWiki AppId web page and then entered that data into an SQLite Database. We have then successfully queried that database for some information.

The code from "4n6wiki-query.pl" would be easy to cut and paste into existing scripts that currently only output the AppID thus possibly saving analysts some time.

I'm not sure if this will be of use to any forensicators but it was interesting from a Perl programming perspective. If you think you might find it useful, please leave a comment.